Mastering Docker: A Step-by-Step Guide to Running PostgreSQL and pgAdmin

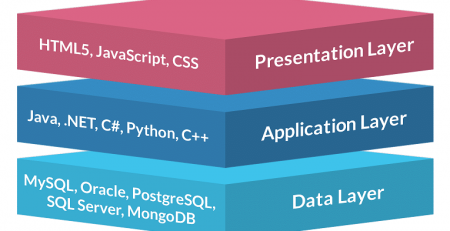

Containerization now plays a central role in how applications are built, shipped, and run, and Docker has become a dominant platform for it. PostgreSQL is a robust open-source relational database management system, and pgAdmin provides a graphical interface for administering PostgreSQL databases. This guide shows how to use Docker to set up and manage PostgreSQL and pgAdmin, from single containers to Docker Compose configurations.

Chapter 1: Understanding Docker

Docker has transformed the landscape of software development by providing a consistent environment for building, shipping, and running applications. In this chapter, we’ll explore the fundamental concepts of Docker through practical code examples.

1. Installing Docker

Let’s make sure Docker is installed on your PC before we get started. Docker offers installation packages for Windows, Linux, and macOS, among other operating systems. From the Docker website, download the installer that is compatible with your platform, then follow the installation guidelines.

Once Docker is installed, verify its version by running the following command in your terminal or command prompt:

docker --version

If Docker is installed correctly, you should see output displaying the installed version, confirming that Docker is ready to use.

2. Running Your First Container

Let’s start by running a simple containerized application. We’ll use the classic “hello-world” example provided by Docker to ensure that everything is set up correctly.

Run the following command in your terminal:

docker run hello-world

This command instructs Docker to download the “hello-world” image from Docker Hub (if it’s not already available locally) and run it as a container. You should see a message indicating that Docker is working correctly.

3. Exploring Docker Images

Docker images are the building blocks of containers. They contain the filesystem and configuration required to run a specific application. Let’s explore how to work with Docker images using some basic commands.

List all Docker images available locally:

docker images

This command will display a list of all Docker images stored on your system, along with their tags and sizes.

4. Building a Docker Image

You can create your own Docker images using Dockerfiles, which are text files that contain instructions for building the image. Let’s create a simple Dockerfile for a Node.js application.

Create a new directory for your project and navigate into it. Then, create a file named “Dockerfile” with the following content:

    # Use the official Node.js image as a base
    FROM node:14

    # Set the working directory in the container
    WORKDIR /app

    # Copy package.json and package-lock.json to the working directory
    COPY package*.json ./

    # Install dependencies
    RUN npm install

    # Copy the rest of the application code
    COPY . .

    # Expose port 3000
    EXPOSE 3000

    # Command to run the application
    CMD ["node", "index.js"]

This Dockerfile specifies a Node.js application, sets the working directory, installs dependencies, exposes a port, and defines the command to run the application.
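The Dockerfile expects a package.json and an index.js in the project directory, which this guide does not supply. The following is a minimal sketch that creates both; the package name, version, and response text are placeholders chosen for illustration, not part of the original project.

```shell
# Create a minimal package.json; the name and version are placeholders.
cat > package.json <<'EOF'
{
  "name": "my-node-app",
  "version": "1.0.0",
  "main": "index.js",
  "scripts": { "start": "node index.js" }
}
EOF

# Create index.js: a tiny HTTP server on port 3000, matching EXPOSE 3000.
cat > index.js <<'EOF'
const http = require("http");

const server = http.createServer((req, res) => {
  res.writeHead(200, { "Content-Type": "text/plain" });
  res.end("Hello from Docker\n");
});

// Bind to 0.0.0.0 so the published port is reachable from the host.
server.listen(3000, "0.0.0.0", () => {
  console.log("Listening on port 3000");
});
EOF
```

Run these commands in the same directory as the Dockerfile. The app has no dependencies, so the npm install step in the image build should complete without downloading anything.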

5. Building and Running the Docker Image

Now that we have created our Dockerfile, let’s build the Docker image and run a container based on it.

Navigate to the directory containing your Dockerfile and run the following command:

docker build -t my-node-app .

This command builds a Docker image named “my-node-app” based on the instructions in your Dockerfile.

Once the image is built, you can run a container using the following command:

docker run -p 3000:3000 my-node-app

This command starts a container based on the “my-node-app” image and maps port 3000 on the host to port 3000 in the container. You should now be able to access your Node.js application running inside the Docker container.

This chapter covered the fundamentals of Docker: installing it, building custom images with Dockerfiles, and running containers. Docker offers a consistent and reliable platform for building and delivering applications. In the next chapter, we’ll use Docker to run PostgreSQL; pgAdmin follows in Chapter 3.

Chapter 2: Setting Up PostgreSQL with Docker

PostgreSQL is a powerful relational database management system, and Docker provides an efficient way to deploy and manage PostgreSQL instances as containers. In this chapter, we’ll walk through the steps to set up PostgreSQL using Docker, complete with code examples.

1. Pulling the PostgreSQL Docker Image

The first step is to pull the official PostgreSQL Docker image from Docker Hub. This image contains the PostgreSQL server and its dependencies.

docker pull postgres

This command fetches the latest version of the PostgreSQL image from Docker Hub and stores it locally on your system.

2. Running a PostgreSQL Container

Once the PostgreSQL image is downloaded, you can run a PostgreSQL container using the docker run command. You can specify environment variables to configure the PostgreSQL instance, such as the password for the default user (postgres).

docker run --name my-postgres -e POSTGRES_PASSWORD=mysecretpassword -d postgres

Here’s what each part of the command does:

- --name my-postgres: Assigns a name to the container.

- -e POSTGRES_PASSWORD=mysecretpassword: Sets the password for the default PostgreSQL user (postgres).

- -d: Runs the container in detached mode.

3. Verifying the PostgreSQL Container

To ensure that the PostgreSQL container is up and running, you can use the docker ps command to list all running containers.

docker ps

You should see an output similar to this:

    CONTAINER ID   IMAGE      COMMAND                  CREATED         STATUS         PORTS      NAMES
    2bf310d7e1e3   postgres   "docker-entrypoint.s…"   3 seconds ago   Up 2 seconds   5432/tcp   my-postgres

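This indicates that the my-postgres container is running. The 5432/tcp entry shows the port PostgreSQL listens on inside the container. Because the docker run command above did not publish that port with -p, it is not reachable from the host; to connect with client tools running on the host, start the container with -p 5432:5432 added.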

4. Connecting to the PostgreSQL Database

You can connect to the PostgreSQL database running inside the container using various PostgreSQL client tools. For example, you can use the psql client to connect to the database.

docker exec -it my-postgres psql -U postgres

This command opens an interactive psql session as the postgres user in the my-postgres container.

5. Performing Database Operations

Once connected to the PostgreSQL database, you can perform various database operations. For example, you can create a new database using SQL commands.

CREATE DATABASE mydatabase;

This command creates a new database named mydatabase within the PostgreSQL server.
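The same statements can also be run without opening an interactive session. The sketch below defines a hypothetical wrapper function, pg_sql (not part of Docker or PostgreSQL), and assumes the my-postgres container from the previous steps; the notes table in the usage comment is purely illustrative.

```shell
# Hypothetical wrapper: run psql inside the my-postgres container.
# -i keeps stdin open so SQL can also be piped in.
# ON_ERROR_STOP=1 makes psql exit non-zero when a statement fails.
pg_sql() {
  docker exec -i my-postgres psql -U postgres -v ON_ERROR_STOP=1 "$@"
}

# Usage (requires the my-postgres container to be running):
#   pg_sql -c 'CREATE DATABASE mydatabase;'
#   pg_sql -d mydatabase -c 'CREATE TABLE notes (id serial PRIMARY KEY, body text NOT NULL);'
#   pg_sql -c '\l'    # list databases
```

Wrapping the command in a function keeps the container name and user in one place, which helps when the same calls are reused in scripts.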

Conclusion

Setting up PostgreSQL with Docker is straightforward and efficient. By pulling the PostgreSQL image and running a container with the desired configuration, you can quickly deploy PostgreSQL instances for your development or production environments. In the next chapter, we’ll explore how to set up pgAdmin, a graphical administration tool for PostgreSQL, using Docker.

Chapter 3: Managing PostgreSQL with pgAdmin

pgAdmin is a popular open-source graphical user interface (GUI) for PostgreSQL that simplifies database administration tasks. By running pgAdmin in a Docker container, you can easily manage your PostgreSQL databases in a convenient and isolated environment. In this chapter, we’ll explore how to set up pgAdmin with Docker and demonstrate some common management tasks using code examples.

1. Pulling the pgAdmin Docker Image

First, let’s pull the official pgAdmin Docker image from Docker Hub.

docker pull dpage/pgadmin4

This command fetches the latest version of the pgAdmin image and stores it locally on your system.

2. Running a pgAdmin Container

Now, let’s run a pgAdmin container using the Docker command-line interface. We’ll specify environment variables to configure pgAdmin, including the login credentials.

docker run --name my-pgadmin -p 5050:80 -e PGADMIN_DEFAULT_EMAIL=user@example.com -e PGADMIN_DEFAULT_PASSWORD=mysecretpassword -d dpage/pgadmin4

Here’s a breakdown of the command:

- --name my-pgadmin: Assigns a name to the pgAdmin container.

- -p 5050:80: Maps port 5050 on the host to port 80 in the container, where pgAdmin’s web server listens by default, allowing access to pgAdmin’s web interface.

- -e PGADMIN_DEFAULT_EMAIL=user@example.com: Sets the default email address for logging in to pgAdmin.

- -e PGADMIN_DEFAULT_PASSWORD=mysecretpassword: Sets the default password for logging in to pgAdmin.

- -d: Runs the container in detached mode.

3. Accessing pgAdmin

Once the pgAdmin container is running, you can access it through a web browser by navigating to http://localhost:5050. Log in using the email address and password specified earlier (user@example.com and mysecretpassword, respectively).

4. Managing PostgreSQL Databases

With pgAdmin up and running, you can perform various database management tasks, such as creating databases, tables, and users, executing SQL queries, and managing data. Let’s demonstrate how to create a new database using pgAdmin’s web interface.

- Log in to pgAdmin through your web browser.

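- In the navigation pane, right-click the “Servers” node and choose the option to register a new server (“Register > Server” in recent pgAdmin releases), or use the “Add New Server” quick link on the dashboard.

- Enter the connection details for your PostgreSQL server, including the host, port, username, and password. Because pgAdmin runs in its own container, localhost refers to the pgAdmin container itself; the host must be a name or address at which the PostgreSQL container is reachable from pgAdmin.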

- Click “Save” to connect to the PostgreSQL server.

- Once connected, right-click on the “Databases” node and select “Create > Database.”

- Enter the name of the new database and any desired options, then click “Save” to create the database.
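The connection step above needs a host name that pgAdmin can resolve. Containers started with plain docker run join Docker's default bridge network, which does not resolve container names. One way around this, sketched below, is to attach both containers to a user-defined network. The function name link_pg_containers and the network name pg-net are hypothetical; the container names are the ones used in this guide.

```shell
# Hypothetical helper: attach both containers to a shared user-defined
# network so pgAdmin can resolve the PostgreSQL container by name.
link_pg_containers() {
  net="${1:-pg-net}"
  # Create the network only if it does not exist yet.
  docker network inspect "$net" >/dev/null 2>&1 || docker network create "$net"
  docker network connect "$net" my-postgres
  docker network connect "$net" my-pgadmin
}

# Usage (both containers must already exist):
#   link_pg_containers
```

After calling the function, enter my-postgres as the host and 5432 as the port in pgAdmin's connection dialog.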

In this chapter, we used pgAdmin in a Docker container to manage PostgreSQL databases. By pulling the pgAdmin image and starting a container with the right settings, you can administer your databases through a web interface. In the next chapter, we’ll use Docker Compose to run the PostgreSQL and pgAdmin containers together.

Chapter 4: Configuring PostgreSQL and pgAdmin

Configuring PostgreSQL and pgAdmin involves setting up environment variables, defining volumes, and specifying network configurations to ensure seamless communication between the containers. In this chapter, we’ll explore how to configure PostgreSQL and pgAdmin using Docker Compose, a tool for defining and running multi-container Docker applications.

1. Creating a Docker Compose File

First, let’s create a Docker Compose file named docker-compose.yml in your project directory.

    version: '3'

    services:
      postgres:
        image: postgres
        environment:
          POSTGRES_PASSWORD: mysecretpassword
        ports:
          - "5432:5432"
        volumes:
          - pgdata:/var/lib/postgresql/data

      pgadmin:
        image: dpage/pgadmin4
        environment:
          PGADMIN_DEFAULT_EMAIL: user@example.com
          PGADMIN_DEFAULT_PASSWORD: mysecretpassword
        ports:
          # pgAdmin listens on port 80 inside the container
          - "5050:80"

    volumes:
      pgdata:

In this Docker Compose file:

- We define two services: postgres for PostgreSQL and pgadmin for pgAdmin.
- For the postgres service:
  - We use the official PostgreSQL image.
  - Set the POSTGRES_PASSWORD environment variable to mysecretpassword to secure the PostgreSQL instance.
  - Expose port 5432 to allow connections to PostgreSQL.
  - Define a volume named pgdata to persist PostgreSQL data.
- For the pgadmin service:
  - We use the official pgAdmin image.
  - Set the PGADMIN_DEFAULT_EMAIL and PGADMIN_DEFAULT_PASSWORD environment variables for logging in to pgAdmin.
  - Expose port 5050 on the host to access pgAdmin’s web interface.

2. Running the Docker Compose Configuration

To start PostgreSQL and pgAdmin using the Docker Compose configuration, run the following command in your terminal:

docker-compose up -d

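This command reads the docker-compose.yml file and creates and starts the defined services in detached mode (-d). With Docker Compose V2, which ships as a Docker CLI plugin, the equivalent command is docker compose up -d.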

3. Accessing pgAdmin

Once the Docker Compose configuration is running, you can access pgAdmin through a web browser by navigating to http://localhost:5050. Log in using the email address and password specified in the Docker Compose file (user@example.com and mysecretpassword, respectively).

4. Connecting to PostgreSQL from pgAdmin

To connect pgAdmin to the PostgreSQL database, follow these steps:

- Log in to pgAdmin through your web browser.

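- In the navigation pane, right-click the “Servers” node and choose the option to register a new server (“Register > Server” in recent pgAdmin releases), or use the “Add New Server” quick link on the dashboard.

- Enter the connection details for PostgreSQL, including the host (postgres), port (5432), username (postgres), and password (mysecretpassword) specified in the Docker Compose file. The host is the service name postgres because Compose places both services on a shared network where service names resolve.

- Click “Save” to connect to the PostgreSQL server.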

In this chapter, we’ve configured PostgreSQL and pgAdmin using Docker Compose, defining environment variables, volumes, and published ports. Running the two containers from one Compose file streamlines database administration and lets pgAdmin reach PostgreSQL by service name. In the next chapter, we’ll explore more advanced Docker Compose configurations, including custom networks, service dependencies, and scaling.

Chapter 5: Advanced Docker Compose Configuration

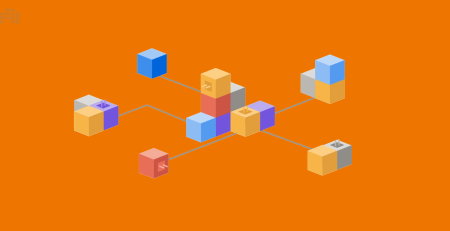

Docker Compose allows for sophisticated orchestration of multi-container Docker applications through a declarative YAML configuration file. In this chapter, we’ll explore advanced Docker Compose configurations for managing PostgreSQL and pgAdmin containers, including network configurations, environment variables, and service dependencies.

1. Network Configuration

By default, Docker Compose creates a default network for the services defined in the docker-compose.yml file. However, you can define custom networks to control communication between containers and improve security.

    version: '3'

    networks:
      pg_network:

    services:
      postgres:
        image: postgres
        environment:
          POSTGRES_PASSWORD: mysecretpassword
        ports:
          - "5432:5432"
        volumes:
          - pgdata:/var/lib/postgresql/data
        networks:
          - pg_network

      pgadmin:
        image: dpage/pgadmin4
        environment:
          PGADMIN_DEFAULT_EMAIL: user@example.com
          PGADMIN_DEFAULT_PASSWORD: mysecretpassword
        ports:
          # pgAdmin listens on port 80 inside the container
          - "5050:80"
        networks:
          - pg_network

    volumes:
      pgdata:

In this configuration, we define a custom network named pg_network and attach both the PostgreSQL and pgAdmin services to it using the networks key. Containers on pg_network can reach each other by service name, while containers that are not attached to it cannot reach them over this network. Note that ports published with the ports key remain reachable from the host regardless of the network.

2. Service Dependencies

In complex applications, certain services may depend on others. Docker Compose allows you to define dependencies between services to ensure that they start in the correct order.

    version: '3'

    services:
      postgres:
        image: postgres
        environment:
          POSTGRES_PASSWORD: mysecretpassword
        ports:
          - "5432:5432"
        volumes:
          - pgdata:/var/lib/postgresql/data

      pgadmin:
        image: dpage/pgadmin4
        depends_on:
          - postgres
        environment:
          PGADMIN_DEFAULT_EMAIL: user@example.com
          PGADMIN_DEFAULT_PASSWORD: mysecretpassword
        ports:
          # pgAdmin listens on port 80 inside the container
          - "5050:80"

    volumes:
      pgdata:

In this example, we specify that the pgadmin service depends on the postgres service using the depends_on key. Compose then starts the postgres container before the pgadmin container. In this short form, depends_on controls start order only: it does not wait for PostgreSQL to be ready to accept connections.
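If start order alone is not enough, one option is to add a health check to the postgres service and have pgadmin wait until it passes. The sketch below writes a standalone Compose file for this. The file name docker-compose.health.yml and the interval, timeout, and retry values are assumptions for illustration, and the long form of depends_on assumes a Compose release that implements the Compose Specification (such as Compose V2).

```shell
# Write a standalone Compose file (hypothetical name) with a health check.
cat > docker-compose.health.yml <<'EOF'
services:
  postgres:
    image: postgres
    environment:
      POSTGRES_PASSWORD: mysecretpassword
    volumes:
      - pgdata:/var/lib/postgresql/data
    healthcheck:
      # pg_isready ships with the postgres image and exits 0 once the
      # server accepts connections.
      test: ["CMD-SHELL", "pg_isready -U postgres"]
      interval: 5s
      timeout: 5s
      retries: 10

  pgadmin:
    image: dpage/pgadmin4
    depends_on:
      postgres:
        condition: service_healthy   # wait for the health check to pass
    environment:
      PGADMIN_DEFAULT_EMAIL: user@example.com
      PGADMIN_DEFAULT_PASSWORD: mysecretpassword
    ports:
      - "5050:80"

volumes:
  pgdata:
EOF
```

Start it with docker compose -f docker-compose.health.yml up -d. Compose should then hold back the pgadmin container until pg_isready reports that PostgreSQL accepts connections.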

3. Scaling Services

Docker Compose also supports scaling services to run multiple instances of a containerized application. This can be useful for horizontally scaling stateless services or performing load balancing.

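    version: '3'

    services:
      web:
        image: nginx
        ports:
          - "80:80"

      app:
        image: myapp
        # No fixed host port: three replicas cannot all bind host port 3000
        expose:
          - "3000"
        environment:
          - NODE_ENV=production
        scale: 3

In this configuration, the app service is scaled to run three instances (scale: 3), allowing Docker Compose to create and manage multiple containers running the same application. The app service does not publish a fixed host port, because only one container can bind a given host port; the replicas are reached through the Compose network instead. The scale key is honoured by current Compose releases; the same result can be requested on the command line with docker-compose up -d --scale app=3.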

In this chapter, we’ve explored advanced Docker Compose configurations for managing multi-container Docker applications. By leveraging custom networks, service dependencies, and scaling capabilities, you can orchestrate complex application architectures with ease. These advanced configurations enable you to build scalable, resilient, and efficient Dockerized environments for your PostgreSQL and pgAdmin deployments. In the next chapter, we’ll discuss best practices for managing Dockerized databases to ensure security, performance, and reliability.

Chapter 6: Best Practices for Dockerized Databases

Dockerized databases offer flexibility and ease of management, but they also require careful consideration to ensure security, performance, and reliability. In this chapter, we’ll discuss best practices for managing Dockerized databases, focusing on PostgreSQL and pgAdmin, and provide code examples where applicable.

1. Use Official Docker Images

When deploying Dockerized databases, it’s best to use official Docker images provided by the database vendors. Official images are regularly updated, well-maintained, and come with security patches and performance improvements.

    services:
      postgres:
        image: postgres
        # Other configurations...

In this example, we use the official PostgreSQL Docker image available on Docker Hub without modifications.

2. Set Strong Passwords

Always set strong and unique passwords for database users and administrative interfaces to prevent unauthorized access.

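    services:
      postgres:
        environment:
          POSTGRES_PASSWORD: mystrongpassword

In this example, we set the password for the default PostgreSQL user using the POSTGRES_PASSWORD environment variable. The value mystrongpassword is only a placeholder; use a long, randomly generated password and keep it out of version control.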
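One way to avoid hard-coding a password is to generate it and store it in a .env file next to the Compose file. This is a minimal sketch that assumes a Unix-like system with /dev/urandom; the 32-character hexadecimal format is an arbitrary choice for illustration.

```shell
# Read 16 random bytes and print them as 32 hexadecimal characters.
password="$(head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n')"

# Write the password to .env with owner-only permissions.
# The subshell keeps the umask change from affecting later commands.
(
  umask 077
  printf 'POSTGRES_PASSWORD=%s\n' "$password" > .env
)
```

Compose reads .env from the project directory for variable substitution, so the Compose file can then use POSTGRES_PASSWORD: ${POSTGRES_PASSWORD} instead of a literal value. Add .env to .gitignore. Note that the postgres image applies POSTGRES_PASSWORD only when it first initializes an empty data directory, so changing the value later does not change the password of an existing database.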

3. Persist Data with Volumes

Use Docker volumes to persist database data outside of container filesystems. This ensures that data is not lost when containers are stopped or deleted.

    services:
      postgres:
        volumes:
          - pgdata:/var/lib/postgresql/data

    volumes:
      pgdata:

In this example, we define a Docker volume named pgdata and mount it to the PostgreSQL container’s data directory.

4. Implement Backup Strategies

Regularly back up database data to prevent data loss in case of accidental deletion or container failure. You can use tools like pg_dump or third-party backup solutions.

docker exec my-postgres pg_dump -U postgres mydatabase > backup.sql

In this example, we use the pg_dump utility to create a backup of the mydatabase database.
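For repeated backups, it helps to timestamp each file so that earlier dumps are not overwritten. The sketch below wraps the command above in a hypothetical function, pg_backup; the backups directory and the file-name pattern are assumptions, and the container name and user are the ones used in this guide.

```shell
# Hypothetical helper: dump one database from the my-postgres container
# into a timestamped file. Prints the path of the file it created.
pg_backup() {
  db="$1"
  out_dir="${2:-backups}"
  mkdir -p "$out_dir"
  out_file="$out_dir/${db}_$(date +%Y%m%d_%H%M%S).sql"
  # pg_dump writes SQL to stdout; the redirect runs on the host.
  if docker exec my-postgres pg_dump -U postgres "$db" > "$out_file"; then
    echo "$out_file"
  else
    rm -f "$out_file"   # do not keep a partial dump
    return 1
  fi
}

# Usage (requires the my-postgres container to be running):
#   pg_backup mydatabase
```

A plain-SQL dump like this can be restored by piping it back into psql, for example docker exec -i my-postgres psql -U postgres -d mydatabase < backup.sql, where -i keeps standard input open for the redirect.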

5. Monitor Resource Usage

Monitor resource usage of Docker containers to ensure optimal performance and identify potential bottlenecks. Use tools like Docker Stats or container orchestration platforms to monitor CPU, memory, and disk usage.

docker stats my-postgres

In this example, we use the docker stats command to monitor resource usage of the PostgreSQL container named my-postgres.
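By default, docker stats keeps refreshing until interrupted. For scripts or quick checks, a one-shot snapshot with selected columns can be more convenient. The function name pg_stats below is hypothetical; the --no-stream and --format options are standard docker stats flags.

```shell
# Hypothetical helper: print a single snapshot of CPU and memory usage
# for the named containers instead of a continuously updating view.
pg_stats() {
  docker stats --no-stream \
    --format 'table {{.Name}}\t{{.CPUPerc}}\t{{.MemUsage}}' "$@"
}

# Usage (requires running containers):
#   pg_stats my-postgres my-pgadmin
```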

6. Regularly Update Docker Images

Keep Docker images up to date by regularly pulling the latest versions from Docker Hub. Updated images often include security patches and bug fixes.

docker pull postgres:latest

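In this example, we use the docker pull command to fetch the latest version of the PostgreSQL image. Pulling a new image does not change containers that are already running; recreate them (for example, with docker-compose up -d) to pick it up. For production, consider pinning a major version tag such as postgres:16 rather than latest, since a new PostgreSQL major version cannot read an older version’s data directory without an upgrade step.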

7. Limit Container Permissions

Restrict container permissions to reduce the risk of container breakout attacks. Use Docker security features such as user namespaces, SELinux, or AppArmor.

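    services:
      postgres:
        security_opt:
          - no-new-privileges:true

In this example, we use the security_opt option to set no-new-privileges, which prevents processes in the container from gaining additional privileges through setuid or setgid binaries. Avoid seccomp:unconfined: it disables Docker’s default seccomp filter and so widens, rather than narrows, what the container is allowed to do. As with any hardening option, test it against the image you actually run.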

8. Implement Access Controls

Implement access controls to restrict network access to database services and administrative interfaces. Use firewalls, network policies, or Docker network configurations to limit access to trusted sources.

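    services:
      postgres:
        ports:
          # Bind to the loopback interface only
          - "127.0.0.1:5432:5432"
        networks:
          - trusted_network

    networks:
      trusted_network:
        external: true

In this example, the PostgreSQL service joins the trusted_network Docker network, so only containers attached to that network can reach it by name. The published port is bound to 127.0.0.1, which makes it reachable from the host itself but not from other machines; if no host access is needed, omit the ports entry entirely. Because the network is marked external, it must already exist (for example, created with docker network create trusted_network) before the services start.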

By following these best practices, you can ensure that your Dockerized databases are secure, performant, and reliable. Whether you’re deploying PostgreSQL, pgAdmin, or other database technologies in Docker containers, incorporating these practices into your workflows will help you maintain a robust and efficient database environment.
