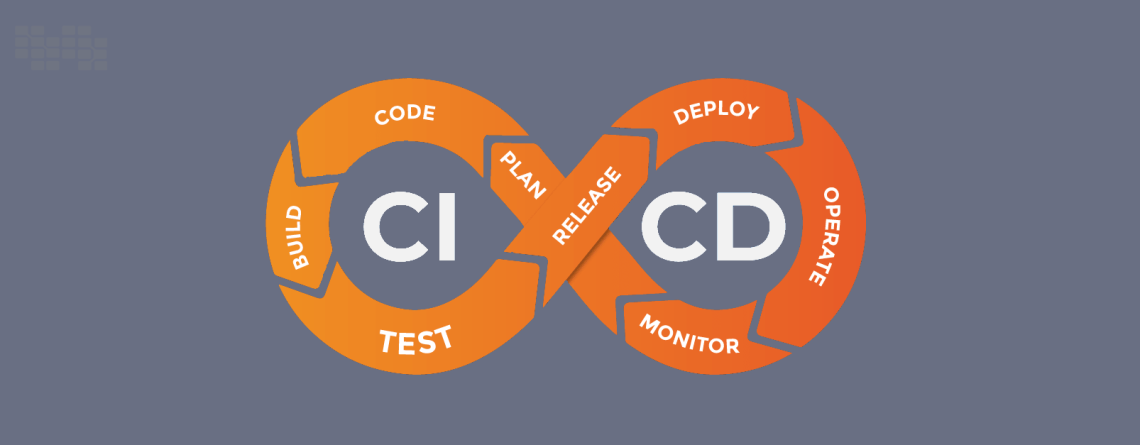

Getting Started With CI/CD Pipelines

The explosion of cloud computing has resulted in the rapid development of software programs and applications. The ability to deliver features more quickly is now a competitive advantage.

To achieve this, your DevOps teams, structure, and ecosystem must run like a well-oiled machine. That makes it critical to understand how to build an ideal CI/CD pipeline, one that helps you deliver features at a rapid pace.

This blog post covers key cloud concepts, execution playbooks, and best practices for setting up CI/CD pipelines on public clouds such as AWS, Azure, and GCP, as well as in hybrid and multi-cloud environments.

Source Code:

This is where every CI/CD pipeline begins: all of the packages and dependencies relevant to the application under development are organized and stored here. At this stage, it is critical to have a mechanism in place that gives designated project reviewers control over what is merged. This prevents developers from arbitrarily pushing bits of code into the main codebase. It is the reviewers’ responsibility to approve pull requests before the code moves forward to the next stage. Although this may require several different tools, it pays off in the long run.
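As a rough illustration of the reviewer gate, the sketch below decides whether a pull request may be merged. The `PullRequest` type and its fields are hypothetical, and so is the specific rule: at least one approval from someone other than the author, with no outstanding change requests. In practice this gate is usually configured as a branch-protection rule in your source-control platform rather than written by hand.

```python
from dataclasses import dataclass, field


@dataclass
class PullRequest:
    """Hypothetical model of a pull request awaiting review."""
    author: str
    approvals: set[str] = field(default_factory=set)
    changes_requested: bool = False


def can_merge(pr: PullRequest, required_approvals: int = 1) -> bool:
    """Allow a merge only when enough reviewers other than the author approve."""
    # Self-approval does not count toward the required total.
    independent = pr.approvals - {pr.author}
    return not pr.changes_requested and len(independent) >= required_approvals


pr = PullRequest(author="alice", approvals={"alice"})
print(can_merge(pr))  # False: only the author has approved
pr.approvals.add("bob")
print(can_merge(pr))  # True
```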

Build:

When a change is committed to the source and approved by the reviewers, it moves automatically to the Build stage.

1) Gather Sources and Dependencies. The first step in this stage is simple: compile the source code along with all of its dependencies.
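Here is a minimal sketch of this step. It assumes a Python project whose dependencies are listed in a `requirements.txt` file and whose sources live in a `src/` directory; both are illustrative assumptions. The build runs each command in order and stops at the first non-zero exit code, so a broken dependency install never reaches compilation. Substitute your own build tool’s commands.

```python
import subprocess
import sys


def run_build(steps: list[list[str]]) -> bool:
    """Run build commands in order; stop at the first one that fails."""
    for cmd in steps:
        print("running:", " ".join(cmd))
        result = subprocess.run(cmd)
        if result.returncode != 0:
            print(f"build failed at {cmd!r} (exit code {result.returncode})")
            return False
    return True


# Hypothetical steps for a Python project: fetch dependencies, then byte-compile sources.
BUILD_STEPS = [
    [sys.executable, "-m", "pip", "install", "-r", "requirements.txt"],
    [sys.executable, "-m", "compileall", "-q", "src"],
]
# A CI job would call run_build(BUILD_STEPS) and mark the build failed if it returns False.
```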

2) Unit Tests. This step involves running a large number of unit tests. Many tools now report code coverage, that is, whether or not each line of code is exercised by a test. The goal of an ideal CI/CD pipeline is to be able to commit source code into the build stage with confidence that any defect will be caught by the pipeline, ideally at this stage rather than in one of the later stages of the process. If the source code is not covered by high-coverage unit tests, a defect can pass straight through to the next stage, causing errors there and forcing the developer to roll back to a previous version, which is often a painful process. That is why it is critical to run unit tests with a high level of coverage to ensure that the application works as intended.
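Enforcing coverage usually comes down to a simple threshold check that fails the build. The sketch below computes overall line coverage from per-file counts and raises `SystemExit` when it falls below a minimum. The 80% default and the file names are hypothetical. Tools such as coverage.py already provide this check through `coverage report --fail-under`, so this code only shows the logic.

```python
def coverage_percent(covered: dict[str, int], total: dict[str, int]) -> float:
    """Overall line coverage as a percentage, given covered and total lines per file."""
    total_lines = sum(total.values())
    if total_lines == 0:
        return 100.0  # nothing to cover
    return 100.0 * sum(covered.values()) / total_lines


def enforce_coverage(covered: dict[str, int], total: dict[str, int],
                     minimum: float = 80.0) -> float:
    """Fail the build (raise SystemExit) when coverage is below `minimum` percent."""
    pct = coverage_percent(covered, total)
    if pct < minimum:
        raise SystemExit(f"coverage {pct:.1f}% is below the required {minimum:.1f}%")
    return pct


# Hypothetical per-file numbers, as a coverage tool might report them.
covered = {"orders.py": 45, "payments.py": 30}
total = {"orders.py": 50, "payments.py": 50}
print(coverage_percent(covered, total))  # 75.0
```

With these numbers, calling `enforce_coverage(covered, total)` raises `SystemExit`, which a CI job treats as a failed build (exit code 1).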

Test Environment:

This is the first environment the code runs in. Here the code changes are tested and confirmed to be ready for the next stage, which is closer to production.

1) Integration Tests. The most important requirement at this stage is to run integration tests. There are various interpretations of what an integration test is and how it differs from a functional test, so to avoid misunderstanding, it is critical to define the term precisely.

Suppose there is an integration test that calls a ‘create order’ API with an expected input. This call should be immediately followed by a ‘get order’ API call, and the returned order should be checked to ensure that it contains all of the expected elements. If it does not, something is wrong. If it does, the test passes and the API is working as expected.

Integration tests also examine the application’s behavior in terms of business logic. For example, suppose the test calls the ‘create order’ API and the application has a business rule that prevents the creation of orders worth more than $10,000. An integration test should then verify that the application enforces that rule. It is not uncommon to run 50 to 100 integration tests at this stage, depending on the size of the project, but the focus should primarily be on testing the core functionality of the APIs and ensuring that they work as expected.

2) On/Off Switches. Let’s step back at this point to discuss an important mechanism that should sit between the source code and build stages, as well as between the build and test stages: a simple on/off switch that enables or disables the flow of code at any time. This is an excellent way to keep source code that does not need to be built right away from entering the build or test stage, or to keep new code from interfering with something that is already being tested in the pipeline. These switches let developers control which items are promoted to the next stage of the pipeline.
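One simple way to model these switches is a table with one flag per stage transition, checked before any promotion. The stage names and the in-memory table below are assumptions for illustration. A real pipeline would typically store the flags in its CI/CD tool or a configuration service.

```python
from enum import Enum


class Stage(Enum):
    SOURCE = "source"
    BUILD = "build"
    TEST = "test"


# Hypothetical switch table: one on/off flag per stage transition.
promotion_switches = {
    (Stage.SOURCE, Stage.BUILD): True,
    (Stage.BUILD, Stage.TEST): True,
}


def set_switch(src: Stage, dst: Stage, enabled: bool) -> None:
    """Turn the flow of code between two stages on or off."""
    promotion_switches[(src, dst)] = enabled


def can_promote(src: Stage, dst: Stage) -> bool:
    """Code flows from src to dst only while that transition's switch is on."""
    # Unknown transitions default to off, so nothing is promoted by accident.
    return promotion_switches.get((src, dst), False)


# Pause promotion into the test stage while something else is being tested there.
set_switch(Stage.BUILD, Stage.TEST, False)
print(can_promote(Stage.SOURCE, Stage.BUILD))  # True
print(can_promote(Stage.BUILD, Stage.TEST))    # False
```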

Production:

After testing, the production stage usually comes next. However, moving directly from testing to production is typically feasible only for small to medium-sized organizations with a couple of environments at most. As an organization grows, it may need more environments, which makes it harder to maintain code consistency and quality across them. To manage this, code should move from the testing stage to a pre-production stage and only then to the production stage. This is useful when many different developers are testing things at different times, for example when QA is running tests or when a specific new feature is being tested. Developers can use the pre-production environment to create a separate branch or additional environments for running specific tests.

Pre-Production:

When moving code out of the testing environment, it is critical to ensure that it does not cause a negative change in the main production environment, which houses all of the hosts and handles all of the customer traffic. The Pre-Production environment serves a subset of production traffic, ideally less than 10% of the total. This lets developers detect when something goes wrong during a deployment, such as extremely high latency. In that case, alarms go off, alerting the developers that a bad deployment is taking place and allowing them to roll back that specific change immediately.

The Pre-Production serves a straightforward purpose. If code moves directly from the testing stage to production and fails, every host in the environment must be rolled back, which is both tedious and time-consuming. If a bad deployment occurs in the Pre-Production, however, only one host must be rolled back.

This procedure is simple and very quick. The developer only needs to disable that specific host, and production continues to serve the previous version of the code without any harm or changes. Although the concept is simple, the Pre-Production is a very powerful tool for developers because it adds an extra layer of safety to their changes before those changes reach the main production stage.

1) Rollback Alarms. Several things can go wrong when promoting code from the test stage to production. A bad deployment can lead to:

- A high number of errors

- Increased latency

- Unexpected changes in key business metrics

- Various other expected and unexpected anomalies

This makes it critical to build alarms, specifically rollback alarms, into the production environment. A rollback alarm is integrated into the deployment process and monitors a specific environment. It lets developers watch specific metrics of a specific deployment and software version for issues such as rising error counts, high latency, or key business metrics falling below a certain threshold.

The rollback alarm notifies the developer that the change should be rolled back to a previous version. These configured metrics should be monitored directly in an ideal CI/CD pipeline, and the rollback should be initiated automatically. The automatic rollback must be built into the system and activated whenever any of these metrics exceeds or falls below the expected threshold.
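The automatic rollback described above can be reduced to comparing each monitored metric against its bounds. When any bound is crossed, the check calls a rollback hook. The metric names, threshold values, and the `rollback` callback are hypothetical. A real pipeline would read metrics from its monitoring system and invoke its deployment tool’s rollback.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Threshold:
    """Bounds for one monitored metric; either bound may be omitted."""
    metric: str
    max_value: Optional[float] = None  # alarm if the metric rises above this
    min_value: Optional[float] = None  # alarm if the metric falls below this

    def breached(self, value: float) -> bool:
        if self.max_value is not None and value > self.max_value:
            return True
        return self.min_value is not None and value < self.min_value


def check_rollback_alarms(metrics: dict[str, float], thresholds: list[Threshold],
                          rollback: Callable[[list[str]], None]) -> bool:
    """Trigger an automatic rollback if any monitored metric is out of bounds.

    Metrics missing from `metrics` are skipped in this sketch.
    """
    breaches = [t.metric for t in thresholds
                if t.metric in metrics and t.breached(metrics[t.metric])]
    if breaches:
        rollback(breaches)
        return True
    return False


# Hypothetical thresholds for the new deployment.
thresholds = [
    Threshold("error_rate", max_value=0.01),
    Threshold("p99_latency_ms", max_value=500),
    Threshold("orders_per_minute", min_value=50),
]
current = {"error_rate": 0.002, "p99_latency_ms": 870, "orders_per_minute": 64}
check_rollback_alarms(current, thresholds,
                      rollback=lambda names: print("rolling back, breached:", names))
# prints: rolling back, breached: ['p99_latency_ms']
```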

2) Bake Period. The Bake Period is a confidence-building step in which developers watch for anomalies. The ideal duration is 24 hours, but it is not uncommon for teams to shorten it to 12 or even 6 hours during high-volume periods.

When a change is introduced into an environment, errors may not appear immediately. Errors and latency spikes may be delayed, and unexpected API behavior or a specific code path may not surface until a particular system calls it. This is why the Bake Period is so crucial: it gives developers confidence in the changes they have made. If the code has run for the specified time and nothing abnormal has occurred, it is safe to proceed to the next stage.
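A bake gate can be expressed as a time check combined with an alarm check. The change is promoted only once the bake period has fully elapsed and no alarm has fired since deployment. The function name and the way alarm timestamps are passed in are assumptions for this sketch. The 24-hour default and the shorter 6-hour option come from the text above.

```python
from datetime import datetime, timedelta


def bake_complete(deployed_at: datetime, now: datetime, alarm_times: list[datetime],
                  bake_period: timedelta = timedelta(hours=24)) -> bool:
    """True once the bake period has fully elapsed with no alarm since deployment."""
    # Any alarm during the bake means the change is not ready to promote.
    if any(deployed_at <= t <= now for t in alarm_times):
        return False
    return now - deployed_at >= bake_period


deployed = datetime(2024, 1, 1, 9, 0)
print(bake_complete(deployed, deployed + timedelta(hours=6), []))   # False: still baking
print(bake_complete(deployed, deployed + timedelta(hours=25), []))  # True
# A shorter, 6-hour bake during a high-volume period:
print(bake_complete(deployed, deployed + timedelta(hours=6), [],
                    bake_period=timedelta(hours=6)))                 # True
```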

3) Error Counts and Latency Breaches or Anomaly Detection. Developers can use anomaly detection tools to find problems during the Bake Period, but these tools can be expensive for most businesses and are frequently overkill. A simpler, efficient alternative, similar to the monitoring described earlier, is to track error counts and latency breaches over a predetermined time period. If the total number of issues found exceeds a predetermined threshold, the developer should revert to the last working version of the code.
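The simple alternative to anomaly detection can be sketched as a sliding-window counter. Record a timestamp for every error and every latency breach, then revert when the number of issues in the window exceeds the threshold. The 5-minute window and the threshold of 5 are hypothetical values. The sketch also assumes events are recorded in time order.

```python
from collections import deque


class WindowedIssueCounter:
    """Counts errors and latency breaches seen in the last `window_s` seconds."""

    def __init__(self, window_s: float, threshold: int):
        self.window_s = window_s
        self.threshold = threshold
        self._events = deque()  # timestamps, assumed to arrive in time order

    def record(self, timestamp: float) -> None:
        """Record one issue (an error, or a request slower than the latency limit)."""
        self._events.append(timestamp)

    def count(self, now: float) -> int:
        # Drop issues that have fallen out of the window.
        while self._events and self._events[0] <= now - self.window_s:
            self._events.popleft()
        return len(self._events)

    def should_revert(self, now: float) -> bool:
        """Revert when the issue count exceeds the predetermined threshold."""
        return self.count(now) > self.threshold


issues = WindowedIssueCounter(window_s=300, threshold=5)  # hypothetical: >5 issues in 5 min
for t in (10, 20, 30, 40, 50, 60):
    issues.record(t)
print(issues.should_revert(now=100))  # True: 6 issues in the window
print(issues.should_revert(now=330))  # False: only 3 issues remain in the window
```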

4) Canary. A canary continuously exercises the production workflow with expected input and checks for the expected output. Consider the ‘create order’ API used earlier. The developer should configure a canary on that API in the integration test environment, along with a cron job that runs once every minute.

Every minute, the cron job calls the create order API with hardcoded input and checks the response against a hardcoded expected output. This lets the developer know right away if the API starts to malfunction or returns an error, signaling that something is wrong with the system.
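A single canary run can be sketched as one call with fixed input, a comparison against fixed expected fields, and an alert on any mismatch or exception. The payload, the expected `status` field, and the injected `create_order` and `alert` callables are all hypothetical. Scheduling is left to cron: a crontab line such as `* * * * * python3 /path/to/canary.py` (path hypothetical) runs the script once every minute.

```python
from typing import Callable

# Hardcoded canary input and expected output (hypothetical values).
CANARY_INPUT = {"customer_id": "canary-customer", "items": ["sku-canary"], "total": 1.00}
EXPECTED_FIELDS = {"status": "CREATED", "total": 1.00}


def run_canary(create_order: Callable[[dict], dict], alert: Callable[[str], None]) -> bool:
    """Call the API once with known input and compare the response to known output."""
    try:
        response = create_order(CANARY_INPUT)
    except Exception as exc:  # any error from the API counts as a failed canary
        alert(f"canary: create order raised {exc!r}")
        return False
    mismatches = {key: response.get(key) for key, want in EXPECTED_FIELDS.items()
                  if response.get(key) != want}
    if mismatches:
        alert(f"canary: unexpected response fields {mismatches}")
        return False
    return True


# Usage with a stub in place of the real API client:
def fake_create_order(payload: dict) -> dict:
    return {"status": "CREATED", "total": payload["total"]}


print(run_canary(fake_create_order, alert=print))  # True
```

Injecting `create_order` and `alert` keeps the check independent of any particular HTTP client or paging system. The same function can also feed the rollback alarms and bake-period checks.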

The canary concept needs to be tied into the Bake Period, the key alarms, and the key metrics. Everything ultimately connects at the rollback alarm, which returns the pipeline to an earlier software version that is presumed to be working correctly.

Main Production:

The code can advance to the main production environment at the end of the Pre-Production stage once everything is working as it should. For instance, the main production environment would host the remaining 90% of the traffic if the Pre-Production hosted only 10% of it. The stage must contain all the components and metrics used in the Pre-Production, including rollback alarms, the Bake Period, anomaly detection, error count and latency breaches, and canaries. These components and metrics must also be checked exactly as they were in the Pre-Production, only on a much larger scale.

The main challenge most developers encounter is how to direct 10% of traffic to one host while the other 90% goes to another. There are several ways to do this, but the simplest is to split traffic at the DNS level. Using DNS weights, developers can direct a portion of traffic to one URL and the remainder to another. The exact process depends on the technology being used, but DNS-based splitting is the most popular approach and is typically preferred by developers.
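Weighted DNS answers each lookup with a target chosen in proportion to its weight. The simulation below illustrates that behavior for a 10% / 90% split. The hostnames are hypothetical, and the code only models the selection logic. It does not configure any DNS provider.

```python
import random
from collections import Counter

# Hypothetical endpoints and weights mirroring a 10% / 90% split.
WEIGHTED_RECORDS = [
    ("preprod.example.com", 10),
    ("prod.example.com", 90),
]


def resolve(rng: random.Random) -> str:
    """Pick a target with probability proportional to its weight."""
    targets = [name for name, _ in WEIGHTED_RECORDS]
    weights = [weight for _, weight in WEIGHTED_RECORDS]
    return rng.choices(targets, weights=weights, k=1)[0]


rng = random.Random(42)  # fixed seed so the run is repeatable
counts = Counter(resolve(rng) for _ in range(10_000))
for name, _ in WEIGHTED_RECORDS:
    print(f"{name}: {counts[name] / 10_000:.1%}")
```

Weights are relative, so 1 and 9 would produce the same split as 10 and 90. Resolvers and clients also cache DNS answers for the record’s TTL, so the real traffic split is only approximate.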

Conclusion

An ideal CI/CD pipeline should make it possible for teams to quickly, accurately, completely, and reliably generate feedback from their SDLC. The software development process should be optimized and automated regardless of the CI/CD pipeline’s setup and tools.

Let’s go over the important points once more. The main ideas and components of an ideal CI/CD pipeline are as follows:

- The Source Code stage is where all the packages and dependencies are categorized and stored. It adds reviewers who curate the code before it moves to the next stage.

- The Build stage involves compiling code, running unit tests, and checking and enforcing code coverage.

- The Test Environment deals with integration testing and the creation of on/off switches.

- The Pre-Production serves as a soft testing environment in production, handling a portion of the traffic.

- The Main Production environment serves the remainder of the traffic.

Wherever you are in your DevOps journey, Nile Bits’ DevOps services are designed to deliver the exceptional quality we are known for while increasing efficiency. Whether you want to create a CI/CD pipeline from scratch, fix a CI/CD pipeline that is inefficient and not producing the desired results, or speed up a CI/CD pipeline that is still under development, our reliable and distinctive engineering solutions will help your organization:

- Scale rapidly across locations and geographies

- Achieve quicker delivery turnaround

- Accelerate DevOps implementation across tools

Solving Problems in the Real World

We have applied the best practices recommended in this article over the past few years.

Organizations frequently find themselves in need of support at various points along the DevOps journey; some want to create an entirely new DevOps approach, while others begin by looking for ways to improve their current systems and processes. Organizations must rethink their DevOps procedures as their products change and acquire new features, making sure that these changes don’t reduce their productivity or degrade the quality of their end product.

DevOps helps eCommerce Players to Release Features Faster

When it comes to eCommerce, DevOps is essential for boosting overall productivity, managing scale, and quickly implementing new and cutting-edge features.

Nile Bits developed the CI/CD pipeline for a global e-commerce platform with millions of daily visitors. Efficient use of enormous computational resources made an enjoyable online shopping experience possible, and the infrastructure could complete numerous mission-critical tasks with significant time and cost savings.

An ideal CI/CD pipeline is essential to the eCommerce sector because it can reduce resource costs by up to 40% while increasing developer throughput.

Robust CI/CD Pipelines are Driving Phenomenal CX in the BFSI Sector

DevOps has been widely adopted across the BFSI industry, with varying levels of maturity, because it can keep up with constantly expanding user needs that demand the rapid deployment of new features.

As part of a digital transformation project for a reputable bank, Nile Bits upgraded the entire infrastructure with the goal of achieving continuous delivery. Emerging technologies such as Kubernetes were incorporated into the process, allowing the institution to move at startup speed and making its go-to-market (GTM) ten times faster.

DevOps & CI/CD pipelines are beginning to serve as the cornerstone of innovation for BFSI technology leaders as they seek to compete through digital capabilities.

A well-functioning DevOps team, ecosystem, and structure can make all the difference when it comes to generating business benefits and using technology to your advantage.

Start your DevOps journey right now!

Chat with us and let’s build.
